Lethal autonomous weapon systems (LAWS) represent a pivotal evolution in military technology, enabling weapons to independently identify, target, and neutralize threats without direct human intervention. These systems rely on sophisticated sensors and advanced algorithms to perform critical tasks traditionally requiring human oversight. While they remain in the nascent stages of development, LAWS hold the promise of operating effectively in communications-denied or degraded environments—settings where conventional systems may fail. This capability could redefine the battlefield, offering strategic advantages in scenarios where maintaining human control is impractical or impossible.

Contrary to a common assumption, U.S. policy does not prohibit the development or use of LAWS. Although the United States is not known to currently field LAWS, some senior military and defense leaders have stated that it may be compelled to develop them if adversaries do so. The geopolitical implications of such advancements underscore a growing urgency to address the ethical, operational, and strategic dimensions of autonomous weaponry. At the same time, international advocacy for regulating or banning LAWS is gaining traction, fueled by concerns over accountability, ethical use, and compliance with international humanitarian law.

Global Implications and Strategic Considerations

The evolution of LAWS technology and the ongoing international discourse surrounding it carry profound implications for U.S. defense strategy. Congressional oversight may need to adapt to keep emerging capabilities aligned with ethical considerations. Investment decisions will require balancing technological innovation against the risks and responsibilities inherent in deploying autonomous systems. Military operational doctrine and treaty negotiations must also evolve to address the realities of autonomous warfare, particularly in the anti-access/area-denial (A2/AD) environments of the Indo-Pacific and in great power competition with China and Russia.

U.S. Policy Framework: Directive 3000.09

The Department of Defense’s policy on autonomy in weapon systems is outlined in Directive 3000.09, first issued in 2012 and most recently updated in 2023. This directive provides a foundational framework for how the United States develops, tests, and deploys autonomous and semi-autonomous systems.

The directive defines LAWS as weapon systems capable of independently selecting and engaging targets once activated, without further human intervention—a concept commonly referred to as “human out of the loop” or full autonomy.

This definition distinguishes LAWS from two other categories. The first is operator-supervised, or “human on the loop,” autonomous weapon systems, in which operators can monitor and halt a weapon's target engagement. The second is semi-autonomous, or “human in the loop,” weapon systems, which engage only individual targets or specific target groups selected by a human operator. Semi-autonomous systems include “fire and forget” guided missiles, which use autonomous functions to deliver effects against targets a human has already identified.

Notably, Directive 3000.09 excludes certain systems from its scope. These include unarmed platforms, unguided munitions, munitions manually guided by an operator (such as laser- or wire-guided munitions), mines, and cyberspace capabilities. This focus confines the policy to armed systems that exercise autonomy in selecting and engaging targets, where questions of human oversight are most acute.
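The distinctions above depend on two questions: who selects targets, and whether an operator can intervene. The minimal Python sketch below shows that decision logic. The `SystemProfile` fields and the abbreviated exclusion set are hypothetical simplifications for illustration. They are not terms or data structures drawn from the directive itself, and the exclusion set is deliberately incomplete.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    OUT_OF_SCOPE = "outside the directive's scope"
    SEMI_AUTONOMOUS = "human in the loop"
    OPERATOR_SUPERVISED = "human on the loop"
    AUTONOMOUS = "human out of the loop (LAWS)"


# Hypothetical, non-exhaustive subset of the exclusions named in the text.
EXCLUDED_TYPES = frozenset({"unarmed platform", "unguided munition", "cyberspace capability"})


@dataclass(frozen=True)
class SystemProfile:
    """Hypothetical, simplified description of a weapon system's human role."""
    system_type: str
    operator_selects_targets: bool  # a human selects individual targets or target groups
    operator_can_halt: bool         # a human monitors and can halt an engagement


def classify(profile: SystemProfile) -> Category:
    """Map a simplified profile onto the autonomy categories described above."""
    if profile.system_type in EXCLUDED_TYPES:
        return Category.OUT_OF_SCOPE
    if profile.operator_selects_targets:
        # e.g. a fire-and-forget missile launched at a human-identified target
        return Category.SEMI_AUTONOMOUS
    if profile.operator_can_halt:
        return Category.OPERATOR_SUPERVISED
    return Category.AUTONOMOUS


if __name__ == "__main__":
    missile = SystemProfile("guided missile", operator_selects_targets=True, operator_can_halt=False)
    print(classify(missile).value)  # human in the loop
```

The order of the checks mirrors the taxonomy. Human target selection places a system in the loop regardless of any other capability. Only a system that selects its own targets is then sorted by whether a human can still halt it.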

Human Oversight and Ethical Considerations

The directive requires that autonomous and semi-autonomous weapon systems be designed to allow commanders and operators to exercise “appropriate levels of human judgment” over the use of force. The term “appropriate” is deliberately flexible: the right level of human involvement may vary with the weapon system, the operational context, and the type of warfare. A 2018 U.S. government white paper on this topic emphasizes that human judgment does not require manual control of the weapon. Instead, it calls for broader human involvement in decisions about how, when, where, and why the weapon will be employed.

To support informed decision-making, Directive 3000.09 calls for comprehensive training, clear tactics, techniques, and procedures (TTPs), and understandable human-machine interfaces. These measures are intended to ensure that operators understand a system's capabilities and limitations so they can make sound judgments in high-pressure environments. The directive also calls for periodic reviews to confirm that systems remain reliable and aligned with operational goals.

Operational Safeguards and Reliability

A core tenet of the directive is rigorous testing and evaluation to ensure that these systems function as intended in realistic operational environments. Systems must be resilient against adaptive adversaries employing countermeasures. They must also complete engagements within a timeframe and geographic area consistent with commander and operator intentions. If a system cannot do so, it must terminate the engagement or obtain additional operator input before continuing.

Moreover, any system changes resulting from machine learning or software updates must undergo thorough re-evaluation to confirm that safety features and operational integrity remain intact. This iterative testing process aligns with the Department of Defense’s commitment to ethical AI principles, ensuring that autonomous systems operate responsibly and with minimal risk of unintended consequences.
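One way to put the retesting requirement into practice through configuration management is to fingerprint the exact software and model artifacts that passed test and evaluation. Fielding would then be blocked whenever the deployed fingerprint differs. The sketch below is hypothetical: the `fingerprint` and `requires_retest` helpers, the artifact names, and the use of SHA-256 are illustrative assumptions, not a DOD-specified mechanism.

```python
import hashlib
from typing import Mapping


def fingerprint(artifacts: Mapping[str, bytes]) -> str:
    """Return a SHA-256 fingerprint over named artifacts, independent of insertion order."""
    digest = hashlib.sha256()
    for name in sorted(artifacts):
        for chunk in (name.encode("utf-8"), artifacts[name]):
            # Length-prefix each field so artifact boundaries are unambiguous.
            digest.update(len(chunk).to_bytes(8, "big"))
            digest.update(chunk)
    return digest.hexdigest()


def requires_retest(tested_fingerprint: str, current: Mapping[str, bytes]) -> bool:
    """Any change since the last completed test and evaluation triggers re-evaluation."""
    return fingerprint(current) != tested_fingerprint


if __name__ == "__main__":
    # Hypothetical artifact names and contents, for illustration only.
    baseline = {"flight_software": b"build-1.4.2", "target_model": b"weights-v7"}
    tested = fingerprint(baseline)
    retrained = dict(baseline, target_model=b"weights-v8")  # e.g. after a model update
    print(requires_retest(tested, baseline), requires_retest(tested, retrained))  # False True
```

Because the check compares artifacts rather than version labels, a learned-model update that silently changes weights still triggers re-evaluation, even if no one bumps a version number.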

Balancing Innovation and Accountability

The debate over LAWS extends beyond technical and operational considerations, touching on broader questions of accountability, proportionality, and compliance with the law of war. While some advocate for a preemptive ban on these systems, the U.S. government has argued that emerging technologies could reduce collateral damage and improve precision in military operations. For example, automated target identification and engagement could mitigate civilian casualties in complex conflict zones.

Nonetheless, the United States faces significant challenges in balancing the pursuit of technological superiority with its ethical obligations. The outcomes of international discussions, particularly under the United Nations Convention on Certain Conventional Weapons (UN CCW), could influence U.S. policy and shape global norms surrounding autonomous weapons.

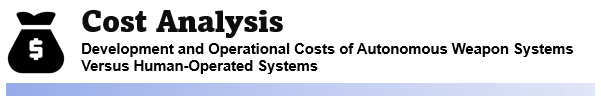

Development Costs of Autonomous UAVs. Developing autonomous UAVs costs more than developing human-operated drones because of the advanced AI systems, sensors, and machine learning algorithms needed to reduce reliance on human operators.

Operational Cost Reduction through Automation. Operational costs for autonomous drones are lower than human-operated ones, as they eliminate the need for pilots and reduce maintenance costs associated with human error and crew management.

Cost of Swarming Technologies. While swarm technologies require substantial up-front investment in research and AI, their operational cost per unit drops significantly, as multiple drones can be deployed with fewer human controllers.

AI-Enhanced Ground Vehicles. The development costs of autonomous military ground vehicles are higher than those of crewed tanks because of the complex AI software and sensors they require. Their operational costs are lower, however, because they eliminate the crew and support personnel.

Long-Term Cost Efficiency in AI-Powered Surveillance. Autonomous surveillance systems lower operational costs over time by reducing the number of intelligence-gathering personnel and improving data processing efficiency compared to human-run operations.

Autonomous Combat Vehicles. While the initial cost of development for autonomous combat vehicles (e.g., tanks, armed drones) is steep due to research and AI integration, operational costs decrease with reduced human manpower, fewer training needs, and longer vehicle uptime without human intervention.

Cost of Autonomous Cyber Warfare Units. The operational costs of autonomous cyber units are lower than traditional human-run cyber operations, as they can operate continuously without rest and scale easily, though the development costs remain high due to the sophisticated software required.

Cost Reduction in Replacing Human Pilots. Development costs are initially high to integrate autonomous flight control, but operational costs fall due to reduced personnel for piloting, mission planning, and risk mitigation in combat zones.

Cost Efficiency in Maritime Autonomous Systems. Although the initial development cost of autonomous ships is comparable to or higher than that of crewed ships, their operational cost is much lower. The savings come from fewer crew members, reduced fuel consumption, and lower maintenance requirements for uncrewed systems.

Decreased Maintenance and Training Costs. Autonomous systems can significantly reduce the need for human maintenance staff and training programs, which results in long-term savings compared to human-operated systems requiring ongoing personnel training and equipment upkeep.

Scalability and Cost Per Unit in Large-Scale Deployments. The cost per unit for autonomous systems becomes competitive as mass production lowers costs, and they offer better scalability than human-operated systems, which require more complex logistical support and a larger workforce.

Comparative Cost of Autonomous Strategic Missile Systems. The development cost of autonomous strategic weapons remains high due to complex guidance and AI systems. Operational costs may decrease, however, as automation reduces the personnel required for manual launch and other operational tasks.

Weapons System Review Process

Under Directive 3000.09, all semi-autonomous and autonomous weapon systems undergo rigorous testing and evaluation to ensure operational reliability. These systems must demonstrate three things: that they perform as intended in realistic scenarios, that they remain effective against adaptive adversaries, and that they complete engagements within a timeframe and geographic area consistent with commander and operator intentions. If a system cannot do so, it must either terminate the engagement or obtain additional operator input before proceeding.
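The fail-safe behavior described above amounts to a bounds check evaluated before each engagement step. The sketch below assumes, purely for illustration, that commander and operator intent is expressed as a time window plus a rectangular latitude/longitude box. The `EngagementBounds` type and `next_action` function are hypothetical, as is the rule for choosing between terminating and requesting input. None of this describes any fielded system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum


class Action(Enum):
    CONTINUE = "continue engagement"
    REQUEST_OPERATOR_INPUT = "pause and request operator input"
    TERMINATE = "terminate engagement"


@dataclass(frozen=True)
class EngagementBounds:
    """Hypothetical representation of the authorized time window and area."""
    start: datetime
    end: datetime
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float

    def contains(self, when: datetime, lat: float, lon: float) -> bool:
        """True if the time and position fall inside the authorized window and area."""
        return (self.start <= when <= self.end
                and self.lat_min <= lat <= self.lat_max
                and self.lon_min <= lon <= self.lon_max)


def next_action(bounds: EngagementBounds, when: datetime, lat: float, lon: float,
                operator_link_up: bool) -> Action:
    """Continue only inside the bounds; otherwise ask a human, or stop if no link exists."""
    if bounds.contains(when, lat, lon):
        return Action.CONTINUE
    # Outside the authorized bounds: never continue without a human decision.
    return Action.REQUEST_OPERATOR_INPUT if operator_link_up else Action.TERMINATE


if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, 12, 0)
    bounds = EngagementBounds(t0, t0 + timedelta(minutes=30), 10.0, 11.0, 120.0, 121.0)
    late = t0 + timedelta(minutes=45)  # past the authorized window
    print(next_action(bounds, late, 10.5, 120.5, operator_link_up=False).value)  # terminate engagement
```

Terminating by default whenever no operator link is available is one conservative reading of "terminate the engagement or obtain additional operator input." The directive itself does not prescribe this particular rule.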

To minimize risks, these systems must be designed with robust safeguards that reduce the likelihood and consequences of failures. Any modification to a system's operating state, including changes from machine learning or software updates, must undergo renewed testing to confirm that the system retains its safety features and operational integrity. Consistent with the Department of Defense (DOD) AI Ethical Principles, all AI-enabled capabilities must be designed and used responsibly and ethically.

Higher-Level Review

In addition to the standard review process, the directive requires a senior-level review for autonomous weapon systems, subject to certain categories it exempts. Approval from the Under Secretary of Defense for Policy (USD[P]), the Vice Chairman of the Joint Chiefs of Staff (VCJCS), and the Under Secretary of Defense for Research and Engineering (USD[R&E]) is required before formal development. Approval from USD(P), VCJCS, and the Under Secretary of Defense for Acquisition and Sustainment (USD[A&S]) is required before fielding.

In cases of urgent military need, the Deputy Secretary of Defense may waive this senior-level review. An Autonomous Weapon System Working Group, composed of representatives from relevant DOD offices, advises on these reviews. Its role is to help ensure a thorough evaluation of each system's readiness and its alignment with policy.
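The two-stage senior review reads naturally as a set-containment check. The sketch below encodes the approver sets named above for each milestone, plus the Deputy Secretary's waiver. The `Milestone` enum and the `may_proceed` and `missing_approvals` functions are hypothetical. The sketch ignores the working group's advisory role and any exemptions, and models only this one gate.

```python
from __future__ import annotations

from enum import Enum


class Milestone(Enum):
    FORMAL_DEVELOPMENT = "formal development"
    FIELDING = "fielding"


# Senior approvals required at each milestone, as summarized in the text above.
REQUIRED_APPROVALS = {
    Milestone.FORMAL_DEVELOPMENT: frozenset({"USD(P)", "VCJCS", "USD(R&E)"}),
    Milestone.FIELDING: frozenset({"USD(P)", "VCJCS", "USD(A&S)"}),
}


def missing_approvals(milestone: Milestone, approvals: set[str]) -> set[str]:
    """Officials whose approval is still outstanding for this milestone."""
    return set(REQUIRED_APPROVALS[milestone] - approvals)


def may_proceed(milestone: Milestone, approvals: set[str],
                urgent_waiver: bool = False) -> bool:
    """True if all required officials approved, or the review was waived.

    `urgent_waiver` is a hypothetical stand-in for a Deputy Secretary of
    Defense waiver in cases of urgent military need.
    """
    return urgent_waiver or not missing_approvals(milestone, approvals)


if __name__ == "__main__":
    signed = {"USD(P)", "VCJCS", "USD(R&E)"}
    print(may_proceed(Milestone.FORMAL_DEVELOPMENT, signed))  # True
    print(missing_approvals(Milestone.FIELDING, signed))      # {'USD(A&S)'}
```

The usage lines show that the same three signatures that clear formal development do not clear fielding. The acquisition approver differs between the two milestones.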

Notification to Congress

Under the FY2024 National Defense Authorization Act (NDAA), the Secretary of Defense must notify the congressional defense committees of any changes to Directive 3000.09 within 30 days, with an explanation of those changes. Additionally, Section 1066 of the FY2025 NDAA requires annual reports on the approval and deployment of lethal autonomous weapon systems through 2029. These measures are intended to strengthen congressional oversight and transparency in the development and use of autonomous systems.

LAWS in International Discussions

The United States has actively participated in international discussions on lethal autonomous weapon systems (LAWS) under the United Nations Convention on Certain Conventional Weapons (UN CCW) since 2014. In 2017, these discussions were formalized under a Group of Governmental Experts (GGE) tasked with addressing the technological, ethical, military, and legal dimensions of LAWS.


Proposals in 2018 and 2019 focused on political declarations and regulatory frameworks. Citing ethical concerns such as accountability, operational risk, and compliance with international humanitarian law, approximately 30 nations and 165 nongovernmental organizations have called for a preemptive ban on LAWS. The United States opposes such a ban. In a 2018 white paper, it argued that autonomous technologies could reduce collateral damage and enhance precision in military engagements.

Because the UN CCW operates by consensus, no outcome can be adopted over U.S. objection. Its outcomes could nonetheless influence U.S. policy on autonomous weapons and shape future norms and practices in this rapidly advancing field.

Top Six Takeaways

(1) LAWS must undergo rigorous evaluations to ensure reliability, minimize risks, and align with operational goals. Any updates to their software or learning algorithms necessitate re-evaluation.

(2) All autonomous systems must adhere to the DOD’s AI Ethical Principles, prioritizing accountability and safety in design and deployment.

(3) A senior-level review involving top DOD officials is required for the development and deployment of LAWS, with exceptions for urgent military needs.

(4) Legislative measures require notification of changes to Directive 3000.09 within 30 days and annual reports on the approval and deployment of LAWS, promoting transparency and accountability in policy and deployment.

(5) Global discussions on LAWS, particularly under the UN CCW, highlight divergent views, with some nations advocating a ban and the U.S. emphasizing the benefits of precision technologies.

(6) The U.S. faces challenges in balancing the pursuit of technological innovation with ethical concerns and international norms, while maintaining its competitive edge in autonomous warfare.

Conclusion

Lethal autonomous weapon systems (LAWS) are poised to redefine the nature of warfare, placing the United States at a critical juncture of technological innovation and ethical responsibility. These systems, while promising unprecedented operational capabilities, bring significant challenges that demand a thoughtful and adaptive policy approach. The rapid pace of advancements in autonomous technologies requires the U.S. to balance its strategic objectives with global norms and ethical imperatives, ensuring that innovation is pursued responsibly.

Directive 3000.09 offers a robust framework for managing the development and deployment of autonomous systems, emphasizing the critical role of human oversight, rigorous testing, and adherence to ethical principles. However, as the scope and capabilities of LAWS expand, the policy must evolve to address emerging concerns and maintain alignment with operational realities and international standards.

The global debate over LAWS, particularly within forums like the United Nations Convention on Certain Conventional Weapons (UN CCW), highlights the growing pressure for regulation and accountability. While some nations and advocacy groups push for a preemptive ban, the U.S. has championed the potential humanitarian benefits of precision-guided autonomous technologies, framing them as tools for reducing collateral damage and enhancing mission effectiveness.

Navigating this complex landscape requires a multifaceted approach. Congressional oversight, informed by rigorous analysis and stakeholder input, will play a vital role in shaping the future of LAWS policy. Investments in counter-autonomy capabilities and international collaboration will be equally critical in ensuring that the U.S. remains at the forefront of this transformative domain.

Ultimately, the intersection of autonomy, ethics, and global security presents a defining challenge for the 21st century. By leveraging its technological prowess and commitment to responsible innovation, the United States has an opportunity to lead not only in the development of LAWS but also in setting the standards for their ethical use. Directive 3000.09 is a step in the right direction, but its continued refinement will be essential to addressing the dynamic realities of autonomous warfare. The future of conflict—and the principles governing it—hangs in the balance.

Acknowledgements and Image Credits

{1} LAWS of War: A Concise Guide to DoD Policy on Lethal Autonomy. Image Credit: PWK International Advisers. 01 Jan 2025

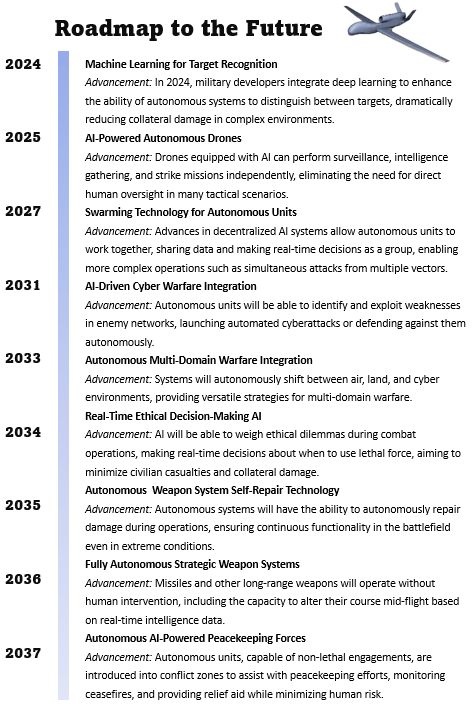

{2} Roadmap to the future of LAWS. Image Credit: PWK International Advisers. 01 Jan 2025

{3} Human Oversight and Ethical Considerations. Image Credit: PWK International Advisers. 02 Jan 2025

{4} Incidents and Ethical Concerns. Source and Credit: PWK International Advisers. 03 Jan 2025

{5} Lethal Autonomy Cost Analysis. Source and Credit: PWK International Advisers. 04 Jan 2025

{6} Under Directive 3000.09, all semi-autonomous and autonomous weapon systems undergo rigorous testing and evaluation to ensure operational reliability. Image Credit: PWK International Advisers. 05 Jan 2025

{7} The Business of LAWS. Our unbiased analysis includes mention of specific autonomous weapon system companies and their electromechanical innovations. All registered trademarks and trade names are the property of their respective owners.

{8} Approximately 30 countries and 165 nongovernmental organizations have called for a preemptive ban on LAWS due to ethical concerns. Image Credit: PWK International Advisers. 05 Jan 2025

{9} Lethal autonomous weapon systems (LAWS) are poised to redefine the nature of warfare, placing the United States at a critical juncture of technological innovation and ethical responsibility. Image Credit: PWK International Advisers. 05 Jan 2025

About PWK International Advisers

PWK International provides national security consulting and advisory services to clients including Hedge Funds, Financial Analysts, Investment Bankers, Entrepreneurs, Law Firms, Non-profits, Private Corporations, Technology Startups, Foreign Governments, Embassies & Defense Attachés, Humanitarian Aid organizations and more.

Services include telephone consultations, analytics & requirements, technology architectures, acquisition strategies, best-practice blueprints and roadmaps, expert witness support, and more.

From cognitive partnerships, cyber security, data visualization and mission systems engineering, we bring insights from our direct experience with the U.S. Government and recommend bold plans that take calculated risks to deliver winning strategies in the national security and intelligence sector. PWK International – Your Mission, Assured.
