In the annals of art history, few events are as notorious and enigmatic as the Isabella Stewart Gardner art heist of 1990. The theft of thirteen priceless artworks, including masterpieces by the likes of Vermeer and Rembrandt, from Boston’s Gardner Museum sent shockwaves through the art world, leaving a mystery that remains unsolved to this day. But as we stand on the precipice of 2026, a new kind of art theft is underway—one that doesn’t involve masked intruders slipping through museum security, but rather algorithms quietly siphoning off the essence of creativity itself.

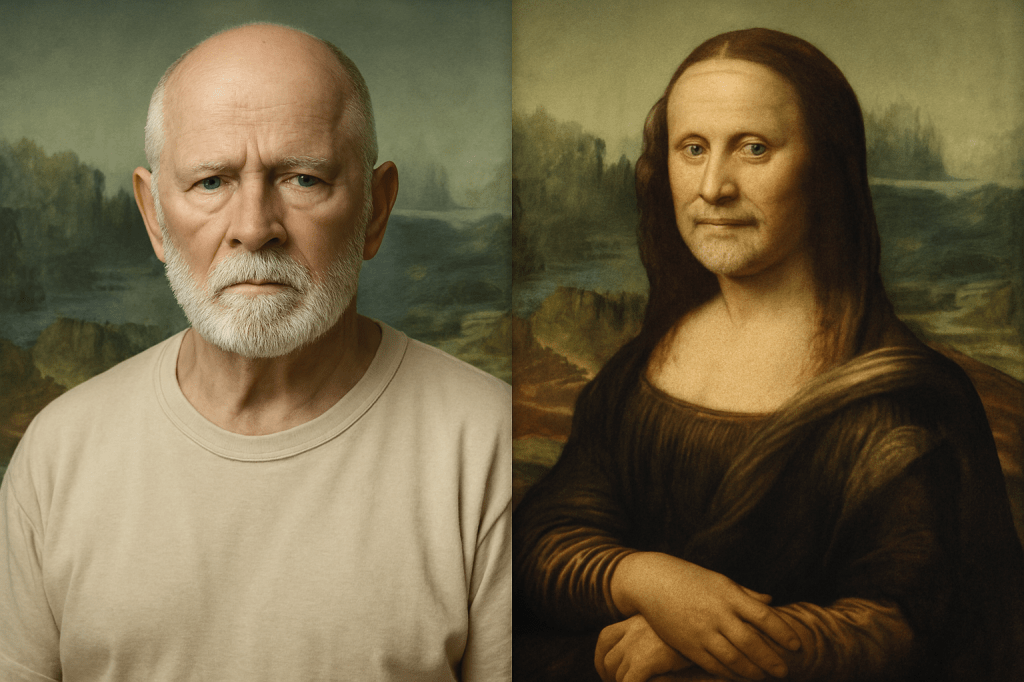

James “Whitey” Bulger, the infamous South Boston gangster with roots buried deep in the city’s tough streets, carved his legend through a chilling mix of brutality, cunning, and charisma. Raised in the Old Harbor housing projects, Bulger’s life mirrored the city’s own gritty transformation. While his official rap sheet included extortion, drug trafficking, and murder, many believe Bulger’s shadow extended even further—into the hallowed halls of the Isabella Stewart Gardner Museum. In the early hours of March 18, 1990, two men dressed as Boston police officers executed what remains the largest art heist in history, vanishing with 13 masterpieces worth over $500 million. Though never officially charged, Bulger’s proximity to the criminal underground, his ties to those capable of orchestrating such a theft, and his rumored interest in valuable leverage point to him as a prime suspect in the decades-long mystery.

Rumors persist that Bulger used the stolen artwork—paintings by Vermeer, Rembrandt, and Degas—as a form of underground currency or bargaining chip in his complicated dance with law enforcement and the mob. Some allege that pieces of the Gardner collection moved through safehouses in Southie, hidden behind false walls or beneath floorboards while the FBI played catch-up. Whether or not Bulger was the mastermind behind the theft, the idea adds another layer to his already mythic persona—a man who straddled the line between street thug and sophisticated operator. His life, like the missing paintings, remains a puzzle: part truth, part legend, all Boston.

In the age of artificial intelligence, the boundaries between artistry and technology have become increasingly porous. AI image models, trained on vast datasets of both commercial and artistic imagery, are now capable of generating astonishingly realistic works of art, blurring the lines between the human hand and the digital realm. However, as these AI-generated creations proliferate across the internet, questions of ownership and copyright infringement have come to the forefront, challenging traditional notions of artistic property rights.

This article examines the intersection of art, technology, and intellectual property in the era of AI. We’ll look at how the democratization of image creation through AI diffusion technology has not only revolutionized the art world but also disrupted established copyright norms, creating an unregulated arena where the boundaries of ownership are increasingly ambiguous.

The Rise of AI Image Models and Their Use in Both Commercial and Artistic Spheres

The proliferation of AI image models marks a paradigm shift in the creation and dissemination of visual content. These sophisticated algorithms are capable of analyzing vast troves of data, learning intricate patterns, and ultimately generating images that rival those crafted by human hands. Initially developed for practical applications such as image recognition and classification, AI image models have since found their way into the realm of art and creativity. Artists and technologists alike have embraced these tools as a means of exploring new frontiers of expression, pushing the boundaries of what is possible in the visual arts.

Simultaneously, AI image models have sparked a renaissance in the world of fine art. Artists are harnessing the power of these algorithms to experiment with new techniques and styles, blurring the boundaries between traditional mediums and digital innovation. Whether creating surreal landscapes, abstract compositions, or hyper-realistic portraits, AI-driven artists are pushing the envelope of what defines contemporary art, challenging audiences to reconsider preconceived notions of authorship and authenticity. Yet, as AI-generated artworks gain recognition and acclaim, questions linger about the role of the human artist in an increasingly automated landscape and the ethical implications of algorithmic creativity.

Top 10 Image Tools in 2025 for Journalists, Educators, Artists and Content Creators

Each of these tools empowers creators to scale visual production, generate unique media assets, and push the boundaries of storytelling—all from a laptop or smartphone.

OpenAI (San Francisco, USA) – DALL·E 3

Available via ChatGPT and Bing, DALL·E 3 excels at generating highly detailed images from text prompts and even allows inpainting (editing parts of images). It’s great for article visuals, cover art, and social media graphics with fast turnaround and creative flexibility.

Midjourney (Distributed, core team in USA) – Midjourney v6

Accessible via Discord, Midjourney is known for its stylized, cinematic art and fast rendering. It’s especially popular with designers and content creators looking to generate visually arresting artwork for branding, storytelling, and concept design.

Google DeepMind (London, UK) – Imagen

Imagen is Google’s high-resolution text-to-image model, known for photorealism and clarity. While currently limited to select researchers, its influence is already seen in Google’s tools like Bard and AI-enhanced image searches—indicating big integration plans.

Runway (New York City, USA) – Gen-2

A leader in text-to-video technology, Runway’s Gen-2 allows users to generate short videos from text prompts or reference images. It’s particularly useful for content creators making dynamic B-roll, surreal footage, or experimental media content.

Meta (Menlo Park, USA) – Emu and Make-A-Video

Meta’s Emu model powers AI image generation in platforms like Instagram and Facebook. Make-A-Video focuses on turning text into video and could be huge for reels, ads, or immersive storytelling once it’s publicly deployed.

Stability AI (London, UK / San Francisco, USA) – Stable Diffusion XL

Available via platforms like Clipdrop and DreamStudio, SDXL is open-source and customizable, making it ideal for creators who want more control over image style, aesthetics, or need local/private generation without sending prompts to the cloud.

Pika Labs (San Francisco, USA) – Pika 1.0

Pika allows users to generate and edit AI videos from text prompts directly in-browser or on mobile. It’s user-friendly and quickly evolving, perfect for creators looking to prototype animations, content intros, or stylized explainer clips.

Adobe (San Jose, USA) – Firefly

Integrated into Photoshop and Express, Adobe Firefly offers AI-generated images and text effects with a focus on commercial safety (trained on licensed images). Ideal for professional designers who want to stay within Adobe’s ecosystem.

Synthesia (London, UK) – AI Video Generator

While more focused on avatar-based videos, Synthesia can turn scripts into talking head videos, making it a game-changer for creators producing educational content, product explainers, or corporate communications without needing cameras or actors.

Luma AI (Palo Alto, USA) – Dream Machine (beta)

Luma’s Dream Machine focuses on photorealistic text-to-video generation. It stands out with smooth motion generation and depth—currently in beta, but already making waves for creators interested in 3D-style cinematic AI footage from minimal prompts.

Training Computers to Be Artists

Text-to-image and text-to-video technologies, exemplified by platforms like Sora, Midjourney, and Stable Diffusion, represent groundbreaking advancements in artificial intelligence that blur the boundaries between language and visual content creation. These systems use deep learning algorithms to interpret textual descriptions and generate corresponding images or videos with remarkable fidelity and realism. By encoding semantic relationships and contextual information from textual inputs, these AI models can synthesize rich, visually coherent representations that align with the intended narrative or description. Whether depicting fantastical landscapes, lifelike portraits, or dynamic scenes, text-to-image and text-to-video technologies offer a powerful tool for creators to bring their ideas to life in vivid detail.

At the heart of these advancements lies the fusion of natural language processing and computer vision techniques, enabling AI systems to comprehend textual prompts and translate them into visually compelling outputs. Through a process known as conditional image generation, text-to-image and text-to-video models learn to correlate specific words or phrases with corresponding visual elements, such as objects, scenes, or actions. By iteratively refining their understanding of textual inputs and optimizing image synthesis algorithms, these AI systems continually improve their ability to generate high-quality visual content that accurately reflects the semantics and nuances of the original text. As a result, text-to-image and text-to-video technologies not only streamline the creative process for artists and content creators but also open new avenues for interactive storytelling, immersive experiences, and personalized content generation in diverse fields ranging from entertainment and advertising to education and virtual reality.

How Do Generative AI Models Use Data?

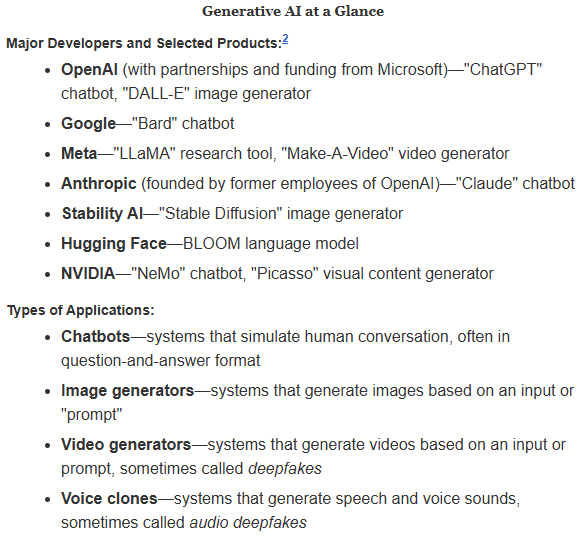

Data play a foundational role in developing and optimizing artificial intelligence. Generative AI models, in particular, depend on vast and diverse datasets to function effectively.

Key Terms

Training a model means feeding it information so it can detect patterns and relationships; this is typically done using what’s known as a training dataset. Once they have learned from that dataset, many models can continue recognizing patterns and making predictions when exposed to new data.

Fine-tuning involves updating an already-trained model with new information or adjusting its behavior using additional, targeted data.
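To make the two terms concrete, here is a minimal sketch in plain Python. It is a toy, not how any real generative model is built: the "model" is a straight line with two parameters, and the datasets, learning rate, and function names are all assumptions chosen for illustration. It first trains on a general dataset, then fine-tunes the already-trained parameters on a small targeted dataset.

```python
def train(data, w=0.0, b=0.0, lr=0.01, epochs=2000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(data, w, b):
    """Mean squared error of the line (w, b) on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Training: a hypothetical "training dataset" that follows y = 2x + 1.
general_data = [(x, 2 * x + 1) for x in range(-5, 6)]
w, b = train(general_data)

# New, targeted data that follows a slightly different rule, y = 2x + 3.
targeted_data = [(x, 2 * x + 3) for x in (0, 1, 2, 3)]
error_before = mse(targeted_data, w, b)

# Fine-tuning: start from the trained parameters instead of from zero,
# and run only a short round of updates on the targeted data.
w_ft, b_ft = train(targeted_data, w=w, b=b, epochs=200)
error_after = mse(targeted_data, w_ft, b_ft)

print(f"error on targeted data before fine-tuning: {error_before:.3f}")
print(f"error on targeted data after fine-tuning:  {error_after:.3f}")
```

The point of the sketch is the shape of the workflow: fine-tuning reuses what training already learned and nudges it with a small amount of new data, which is why the error on the targeted data drops after the second call.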

Generative models, especially large language models (LLMs), require extremely large volumes of data. For instance, OpenAI’s ChatGPT was trained on over 45 terabytes of text, much of it scraped from online sources. The training data also included material from platforms like Wikipedia and collections of digitized books. GPT-3, an earlier model, was trained on around 300 billion tokens (fragments of words), and its architecture included 175 billion parameters, the tunable values that influence how the model interprets and generates content.
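For a rough sense of scale, the GPT-3 figures cited above can be turned into two back-of-the-envelope numbers. The token and parameter counts come from the text; the two bytes of storage per parameter is an assumption made only for this illustration.

```python
TOKENS = 300e9            # GPT-3 training tokens, as cited above
PARAMETERS = 175e9        # GPT-3 parameter count, as cited above
BYTES_PER_PARAMETER = 2   # assumption: 16-bit storage per parameter

tokens_per_parameter = TOKENS / PARAMETERS
weights_gigabytes = PARAMETERS * BYTES_PER_PARAMETER / 1e9

print(f"{tokens_per_parameter:.2f} training tokens per parameter")  # 1.71
print(f"{weights_gigabytes:.0f} GB just to store the parameters")   # 350
```

Under that storage assumption, the parameters alone would occupy hundreds of gigabytes, before counting any of the training data.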

Critics argue that many of these models are built on data acquired in ways that invade user privacy, often without informing or compensating the original content creators. In some cases, models absorb sensitive personal data and can unintentionally reveal it during interactions. A blog post by Google AI researchers acknowledged that these massive datasets—frequently compiled from public sources—can include personally identifiable information (PII) like names, addresses, and phone numbers. Research has demonstrated that certain models may inadvertently surface private details contained in their training material.
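As a rough illustration of what screening scraped text for PII can look like, the sketch below scans a string for email addresses and US-style phone numbers and replaces them with placeholders. The patterns, function names, and sample sentence are assumptions made for this example; they are deliberately simple, will miss many real cases, and do not catch names or street addresses at all.

```python
import re

# Deliberately simple patterns; real PII detection needs far more care.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_PHONE = re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b")


def find_pii(text):
    """Return the email addresses and phone numbers found in text."""
    return {"emails": EMAIL.findall(text), "phones": US_PHONE.findall(text)}


def redact(text):
    """Replace matched emails and phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_PHONE.sub("[PHONE]", text)


# Hypothetical scraped sentence (example.com and 555 numbers are reserved
# for documentation and fiction).
sample = "Contact Jane Doe at jane.doe@example.com or 617-555-0123 for prints."

print(find_pii(sample))
print(redact(sample))
```

Note that the name "Jane Doe" passes through untouched, which hints at why cleaning personal information out of web-scale datasets is harder than a few pattern matches.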

AI tools built using these models are often integrated into commercial platforms or services. Businesses can license systems like ChatGPT for use in their products. For example, platforms such as Duolingo, Snapchat, and Khan Academy have partnered with OpenAI to include ChatGPT in their user experiences. However, individuals whose content helped train these tools may not be aware that their data contributed to commercial applications.

Regulators in some countries have begun to push back against such practices. Italy’s data protection authority, for example, temporarily banned OpenAI from processing the personal data of users in Italy. The ban was lifted after OpenAI made adjustments, such as allowing users to request the removal of personal data in line with the European Union’s General Data Protection Regulation (GDPR).

Where Is the Data Sourced From?

Most AI companies are not transparent about what specific information goes into their training datasets. In generative AI, the bulk of training material is drawn from publicly accessible websites, often through automated web scraping. These scraped datasets are then packaged for internal use, resale, or open-source release.

Popular datasets like the Colossal Clean Crawled Corpus (C4) and Common Crawl are built using bots that systematically collect content from millions of websites. Similarly, AI image-generation models often use LAION—a massive dataset that includes billions of images and their text descriptions, scraped from the web. Some organizations also incorporate their own proprietary data when building these models.
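One place where this kind of collection becomes visible to site owners is the robots.txt file, which well-behaved crawlers consult before fetching pages. The sketch below uses Python's standard `urllib.robotparser` to evaluate a hypothetical robots.txt that blocks Common Crawl's crawler (user agent `CCBot`) while leaving most of the site open to others. The site, the file contents, and the `ExampleBot` name are assumptions for illustration, and robots.txt is voluntary: it only works if the crawler chooses to honor it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an artist's portfolio site.
ROBOTS_TXT = """\
User-agent: CCBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def may_crawl(robots_txt, user_agent, url):
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


gallery = "https://example.com/gallery/sunset.png"
private = "https://example.com/private/drafts.png"

print(may_crawl(ROBOTS_TXT, "CCBot", gallery))       # False
print(may_crawl(ROBOTS_TXT, "ExampleBot", gallery))  # True
print(may_crawl(ROBOTS_TXT, "ExampleBot", private))  # False
```

A rule like this does nothing about content that has already been collected, and it offers no protection when an image is reposted on a site the creator does not control.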

These datasets can include all kinds of public internet content, including personal information, copyrighted works, and sensitive material. They may also include low-quality, offensive, or inaccurate data. Since many creators are unaware their work is being used, tools like “HaveIBeenTrained” have emerged to help individuals identify whether their images or writings have been pulled into these datasets. A 2023 investigation by The Washington Post and the Allen Institute for AI found that the C4 dataset included content from copyrighted websites and even data like voter registration records—raising questions about the ethics and legality of AI training practices.

This method of acquiring data continues to raise red flags about fair use and intellectual property rights. For a deeper legal analysis of these issues, see the CRS Legal Sidebar LSB10922, Generative Artificial Intelligence and Copyright Law, by Christopher T. Zirpoli.

What Happens to Data Entered Into Generative AI Systems?

There are growing concerns about how personal data shared with generative AI platforms—such as chatbots—may be stored, reused, or exposed. A person might discuss private medical information with a virtual assistant, unaware that this data could be retained for retraining models or for monetization by the platform.

These risks are especially significant for AI tools used in sensitive areas such as therapy, legal advice, financial guidance, or healthcare. Critics argue that generative AI systems should be more transparent, requiring clear consent from users and offering disclosures about how data is used, shared, and stored. This is especially crucial in settings where the expectation of privacy is high and the stakes are personal.

The Impact of AI Diffusion Technology on Traditional Notions of Artists’ Property Rights

The advent of AI diffusion technology has fundamentally altered the landscape of artists’ property rights, challenging long-standing notions of ownership and control over creative works. Historically, artists have relied on copyright law to safeguard their intellectual property, granting them exclusive rights to reproduce, distribute, and display their creations. However, the rise of AI-generated imagery has introduced a new layer of complexity to this paradigm, blurring the distinction between human and machine authorship. As AI algorithms autonomously generate artworks based on learned patterns and data, the traditional model of individual authorship becomes increasingly tenuous, raising questions about the applicability of copyright protections in an era of algorithmic creativity.

Moreover, image creation through AI diffusion technology has democratized access to artistic tools and resources, empowering individuals from diverse backgrounds to engage in creative expression. Whereas traditional art forms often require specialized skills and training, AI-driven platforms offer intuitive interfaces and automated processes that lower the barrier to entry for aspiring artists. This wider access has the potential to foster greater diversity and inclusivity in the arts, amplifying voices that have historically been marginalized or underrepresented. However, it also poses challenges to established hierarchies within the art world, as the proliferation of AI-generated artworks blurs the distinction between amateur and professional and unsettles traditional notions of artistic merit and value.

Furthermore, the decentralized nature of AI diffusion technology complicates efforts to enforce artists’ property rights and combat infringement. Unlike traditional forms of piracy, which often involve identifiable individuals or entities engaging in unauthorized reproduction or distribution, AI-generated artworks can proliferate across digital platforms autonomously, making it difficult to attribute authorship or monitor for infringement. As a result, artists may find themselves grappling with a myriad of legal and ethical challenges in protecting their creations from unauthorized use or exploitation. In this rapidly evolving landscape, the need for innovative solutions and collaborative approaches to address the intersection of AI technology and artists’ property rights has never been more pressing.

Top Six Takeaways

1. Generative AI Models Require Immense, Often Unconsented Data

Training large language and image models demands vast datasets, often scraped from the internet without the explicit consent of creators, raising major ethical and legal concerns.

2. Personal Information Can Be Inadvertently Exposed

These massive datasets may contain sensitive, personally identifiable information (PII), which some models have been shown to leak or surface unintentionally during interactions.

3. Lack of Transparency from AI Developers

Most AI companies do not disclose the full scope of their data sources. Datasets like C4, Common Crawl, and LAION are built from sweeping, automated collection of public content with little oversight.

4. Your Data May Power Commercial AI Tools—Without Your Knowledge

Platforms like Snapchat, Duolingo, and Khan Academy have integrated AI models like ChatGPT, yet the people whose data helped train these tools are rarely aware or compensated.

5. Global Regulators Are Beginning to Push Back

Regulatory bodies—such as Italy’s Data Protection Authority—are starting to penalize AI companies for data misuse, forcing them to comply with stricter privacy laws like the EU’s GDPR.

6. Emerging Tools Empower Creators to Fight Back

New resources like “HaveIBeenTrained” allow artists and content creators to identify whether their work has been used in AI training datasets, helping them reclaim some control in this unregulated arena.

Conclusion

The convergence of AI technology and artistic expression presents both unprecedented opportunities and daunting challenges for creators, policymakers, and society at large. As AI-generated imagery reshapes the landscape of the art world, traditional notions of copyright, authorship, and ownership are being redefined.

The Isabella Stewart Gardner art heist serves as a stark reminder of the enduring value placed on physical artworks, while the rise of AI-driven creativity underscores the need for updated legal frameworks and ethical guidelines to safeguard artists’ property rights in the digital age. As we navigate this brave new world of algorithmic artistry, collaboration, innovation, and a commitment to preserving the integrity of creative expression will be essential to ensuring a vibrant and equitable future for the arts.

Acknowledgements

{1} Our unbiased report mentions numerous artificial intelligence innovators and their commercialized products and services. All registered trademarks and trade names are the property of their respective owners.

{2} Generative Artificial Intelligence and Copyright Law. Congressional Research Service. LSB10922 Christopher T. Zirpoli, Legislative Attorney.

{3} Generative Artificial Intelligence and Data Privacy. Congressional Research Service. R47569 Ling Zhu, Analyst in Telecommunications Policy; Laurie Harris, Analyst in Science and Technology Policy.

{4} The Isabella Stewart Gardner Museum, located in Boston, Massachusetts, is a unique art museum housed in a Venetian palace-style building, founded by Isabella Stewart Gardner, and features an eclectic collection of European, Asian, and American art, including paintings, sculptures, and decorative arts. Isabella Stewart Gardner was a prominent figure in the Gilded Age, known for her extensive travels and passion for collecting art from around the world. The museum is located at 25 Evans Way Boston, MA 02115. information@isgm.org

{5} James Joseph “Whitey” Bulger was the boss of the largely Irish Mob in Boston from the 1970s through the 1990s. His longevity and success in organized crime largely can be attributed to protection he received as a high-level FBI informant. Bulger was notorious for his readiness to use violence and especially murder to achieve his criminal goals. A “Southie,” or resident of the tough streets of South Boston, Bulger was attracted to street crime early in life, joining gangs and earning his first arrest at age 14. In the 1980s, Bulger started shaking down narcotics distributors and supplying weapons to the Irish Republican Army, which was fighting the British government in Northern Ireland. Investigators believe the paintings were shipped to Ireland as part of a deal with an IRA-affiliated gang after a shipment of weapons and ammunition was intercepted by the Irish navy.

Additional Information

About PWK International Advisers:

PWK International provides national security consulting and advisory services to clients including Hedge Funds, Financial Analysts, Investment Bankers, Entrepreneurs, Law Firms, Non-profits, Private Corporations, Technology Startups, Foreign Governments, Embassies & Defense Attachés, Humanitarian Aid organizations and more.

Services include telephone consultations, analytics & requirements, technology architectures, acquisition strategies, best practice blueprints and roadmaps, expert witness support, and more.

From cognitive partnerships, cyber security, data visualization and mission systems engineering, we bring insights from our direct experience with the U.S. Government and recommend bold plans that take calculated risks to deliver winning strategies in the national security and intelligence sector. PWK International – Your Mission, Assured.